By Abhishek Kumar | #AIWithAbhishek | #MustReadAI

The world of AI is moving fast — too fast sometimes!

But to build truly smart systems, we need to understand the roots: the papers that laid the foundation for the AI models we use today (like GPT, BERT, and more).

So, I broke down the Top 10 AI papers you should read — what they’re about, why they matter, and what you’ll learn from each.

Let’s decode them together in simple terms:

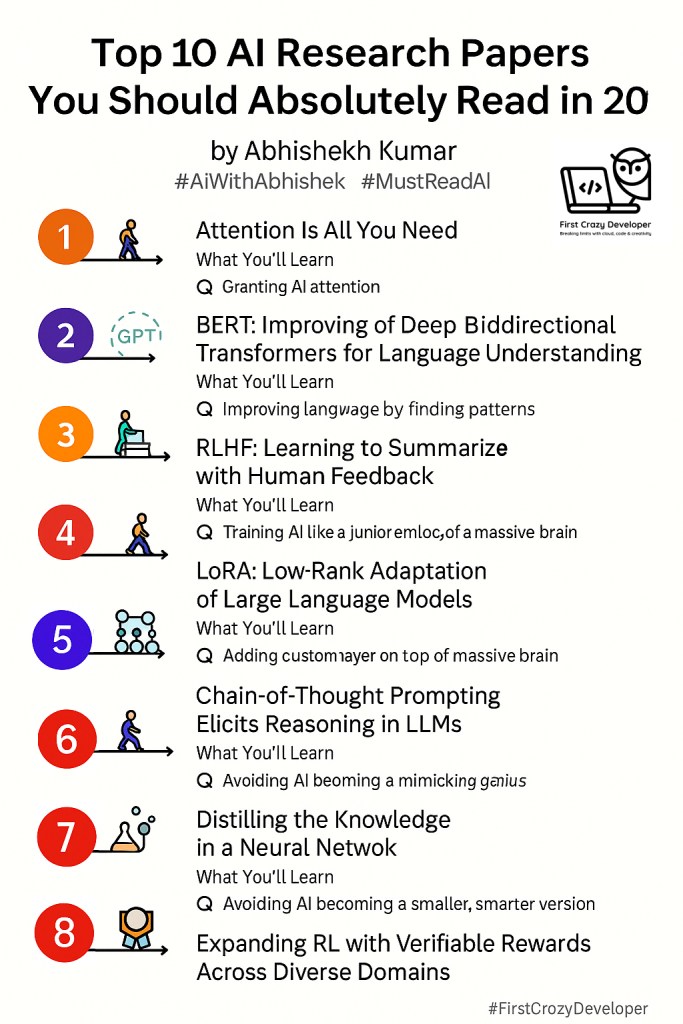

1️⃣ Attention Is All You Need

📄 Authors: Vaswani et al.

📅 Published: 2017

This is the paper that changed everything. It introduced the Transformer architecture, which replaces recurrence with self-attention and powers modern language models like GPT and BERT.

🔍 What You’ll Learn:

- Why “attention” helps models focus on relevant words in a sentence

- How Transformers replaced older RNNs and LSTMs

- Why it’s the foundation for ChatGPT, Gemini (formerly Bard), and Copilot

🧠 Think of it as: Giving AI the ability to “pay attention” like a human reader.
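To make "attention" concrete, here is a minimal sketch of scaled dot-product attention, the core operation from the paper, in plain Python. The toy vectors and function names are my own, and a real Transformer adds learned projections, multiple heads, and masking on top of this.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of vectors (lists of floats)."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy input (made-up numbers): 3 tokens, each a 2-dimensional vector.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(x, x, x)
```

Each row of `weights` sums to 1 and says how much one token "looks at" every other token.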

2️⃣ BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

📄 Authors: Devlin et al.

📅 Published: 2018

BERT stands for Bidirectional Encoder Representations from Transformers. Unlike earlier models that read text only left-to-right or right-to-left, BERT uses context from both directions at once, which improves understanding.

🔍 What You’ll Learn:

- How BERT became the gold standard for NLP tasks (Q&A, sentiment analysis, etc.)

- Why bidirectionality improves comprehension

- BERT’s “masked word” technique for training

🧠 Think of it as: The AI equivalent of reading with full context.
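Here is a rough sketch of the "masked word" idea. The 15% selection rate and the 80/10/10 split follow the recipe described in the BERT paper. The function name, the word-level tokens, and the tiny vocabulary are my own simplifications, since real BERT works on WordPiece tokens.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (corrupted, labels); labels[i] is None where nothing is predicted."""
    rng = random.Random(seed)
    corrupted, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the model must recover the original token
            roll = rng.random()
            if roll < 0.8:
                corrupted.append(MASK)               # 80%: replace with [MASK]
            elif roll < 0.9:
                corrupted.append(rng.choice(vocab))  # 10%: replace with a random token
            else:
                corrupted.append(tok)                # 10%: keep the token unchanged
        else:
            labels.append(None)
            corrupted.append(tok)
    return corrupted, labels

# Toy usage: the sentence doubles as the vocabulary (an assumption for brevity).
sentence = "the quick brown fox jumps over the lazy dog".split()
corrupted, labels = mask_tokens(sentence, vocab=sentence)
```

The model sees `corrupted` and is trained to predict the original token wherever `labels` is not `None`, using the words on both sides.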

3️⃣ GPT: Improving Language Understanding by Generative Pre-Training

📄 Authors: Radford et al. (OpenAI)

📅 Published: 2018

This paper introduced generative pre-training, the idea behind the name GPT: pre-train a language model on a large corpus of unlabeled text, then fine-tune it for specific tasks.

🔍 What You’ll Learn:

- The foundation behind the GPT series (GPT-2, GPT-3, GPT-4…)

- How unsupervised pre-training on unlabeled text improves downstream language tasks

- The “predict the next word” training strategy

🧠 Think of it as: Teaching AI to write by having it read thousands of books first.
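The "predict the next word" objective can be sketched in a few lines. To keep this runnable without any libraries, I use a bigram counter as a toy stand-in for the model (my simplification, not what GPT uses). The training signal is the same idea: the average negative log-likelihood of each token given what came before.

```python
import math
from collections import Counter, defaultdict

def next_token_pairs(tokens):
    """Every prefix is a context; the token that follows it is the target."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def train_bigram(tokens):
    """Toy stand-in for a language model: count which token follows which."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_prob(counts, prev, nxt):
    total = sum(counts[prev].values())
    return counts[prev][nxt] / total if total else 0.0

def average_nll(counts, tokens):
    """Average negative log-likelihood of each token given the previous one.

    Only call this on text whose bigrams were seen in training; an unseen
    bigram has probability 0 here and math.log would fail.
    """
    losses = [-math.log(next_token_prob(counts, p, n))
              for p, n in zip(tokens, tokens[1:])]
    return sum(losses) / len(losses)

corpus = "the cat sat on the mat".split()
pairs = next_token_pairs(corpus)   # first pair: (["the"], "cat")
model = train_bigram(corpus)
loss = average_nll(model, corpus)
```

A real GPT replaces the bigram table with a Transformer that conditions on the whole prefix, but it minimizes this same kind of loss.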

4️⃣ RLHF: Learning to Summarize with Human Feedback

📄 Authors: Stiennon et al.

📅 Published: 2020

Reinforcement Learning from Human Feedback (RLHF) trains models not only on data but also on human preferences between candidate outputs.

🔍 What You’ll Learn:

- How RLHF makes AI outputs more human-like

- The technique used in ChatGPT fine-tuning

- Why optimizing for human preferences can beat optimizing an automatic metric such as ROUGE

🧠 Think of it as: Training your AI like a junior employee—using feedback, not just facts.
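The first step of RLHF is training a reward model on pairs of outputs where a human picked the better one. Below is a minimal sketch of the pairwise loss used for that, `-log(sigmoid(r_preferred - r_rejected))`. The reward values are made-up numbers; in practice they come from a neural network scoring each output.

```python
import math

def preference_loss(reward_preferred, reward_rejected):
    """Pairwise loss: -log(sigmoid(reward_preferred - reward_rejected)).

    Written as softplus(-diff) so it stays finite for large differences.
    """
    diff = reward_preferred - reward_rejected
    return max(-diff, 0.0) + math.log1p(math.exp(-abs(diff)))

# Made-up scores: the loss is small when the human-preferred output scores higher.
agrees_with_human = preference_loss(2.0, 0.0)
disagrees_with_human = preference_loss(0.0, 2.0)
```

Once the reward model is trained, the language model is tuned with reinforcement learning to produce outputs that the reward model scores highly.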

5️⃣ LoRA: Low-Rank Adaptation of Large Language Models

📄 Authors: Hu et al.

📅 Published: 2021

LoRA offers a lightweight way to fine-tune huge models without retraining the entire network: the original weights stay frozen, and only small low-rank matrices are trained. It’s efficient, affordable, and modular.

🔍 What You’ll Learn:

- How to customize big models (like GPT or LLaMA) for your own use case

- Why low-rank matrix decomposition saves computing costs

- The future of personalized AI at scale

🧠 Think of it as: Clipping small, swappable adapters onto a massive brain instead of rewiring it.
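Here is a small sketch of the LoRA idea in plain Python: keep the pretrained matrix `W` frozen and learn a low-rank update `B @ A`, scaled by `alpha / r`. The toy dimensions, the `alpha` value, and the helper names are my own assumptions for illustration.

```python
import random

def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]

def lora_forward(x, W, A, B, alpha):
    """h = W x + (alpha / r) * B (A x); W is frozen, only A and B are trained."""
    r = len(A)  # rank of the update
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]

def param_counts(d_out, d_in, r):
    """(parameters in the full matrix, trainable parameters with LoRA)."""
    return d_out * d_in, r * (d_in + d_out)

# Toy sizes (assumptions): a 6x8 weight matrix with a rank-2 update.
d_out, d_in, r = 6, 8, 2
rng = random.Random(0)
W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # random init
B = [[0.0] * r for _ in range(d_out)]                               # zero init
x = [rng.gauss(0, 1) for _ in range(d_in)]

h = lora_forward(x, W, A, B, alpha=4)
full, trainable = param_counts(4096, 4096, 8)  # a more realistic layer size
```

Because `B` starts at zero, the adapted model initially behaves exactly like the original. For a 4096 x 4096 layer with rank 8, the trainable parameters drop from about 16.8 million to 65,536.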

6️⃣ Retentive Network: A Successor to Transformer for Large Language Models

📄 Authors: Sun et al.

📅 Published: 2023

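This paper proposes RetNet, which replaces the Transformer’s attention with a “retention” mechanism. Retention can be computed in parallel during training and as a recurrence during inference, so the cost of generating each new token stays constant as the sequence grows.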

🔍 What You’ll Learn:

- How to make AI models better at processing long documents

- Alternatives to traditional attention-based memory

- Where Retentive Networks (RetNet) fit among proposed successors to the Transformer

🧠 Think of it as: Giving your AI long-term memory, not just short bursts of focus.
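The key trick in this paper is that the same "retention" operation can be computed two ways: step by step with a fixed-size state, or all at once with a decay-weighted sum over earlier tokens. Here is a simplified single-head sketch showing both forms. I leave out the position rotation and normalization the paper adds, and the toy numbers are my own.

```python
def retention_recurrent(Q, K, V, gamma):
    """Process tokens one at a time, carrying a fixed-size state S."""
    d_k, d_v = len(K[0]), len(V[0])
    S = [[0.0] * d_v for _ in range(d_k)]
    outputs = []
    for q, k, v in zip(Q, K, V):
        # Decay the old state, then add the outer product of k and v.
        S = [[gamma * S[i][j] + k[i] * v[j] for j in range(d_v)]
             for i in range(d_k)]
        outputs.append([sum(q[i] * S[i][j] for i in range(d_k))
                        for j in range(d_v)])
    return outputs

def retention_parallel(Q, K, V, gamma):
    """Same result via a sum over earlier tokens, weighted by gamma ** distance."""
    outputs = []
    for n, q in enumerate(Q):
        out = [0.0] * len(V[0])
        for m in range(n + 1):  # causal: only positions up to n
            w = (gamma ** (n - m)) * sum(qi * ki for qi, ki in zip(q, K[m]))
            out = [o + w * vj for o, vj in zip(out, V[m])]
        outputs.append(out)
    return outputs

# Toy inputs (made-up numbers): 3 tokens, key size 2, value size 3.
Q = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
K = [[1.0, 2.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0], [3.0, 1.0, 0.0]]
rec = retention_recurrent(Q, K, V, gamma=0.9)
par = retention_parallel(Q, K, V, gamma=0.9)
```

The recurrent form only ever stores `S`, whose size does not depend on how long the sequence is. That is where the cheap long-sequence inference comes from.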

7️⃣ Chain-of-Thought Prompting Elicits Reasoning in LLMs

📄 Authors: Wei et al.

📅 Published: 2022

This game-changing paper showed that giving examples of step-by-step reasoning in prompts helps large models think logically.

🔍 What You’ll Learn:

- Why “show your work” works for AI too

- How multi-step reasoning improves accuracy

- Prompting techniques you can use today

🧠 Think of it as: Teaching AI to solve problems like a student explaining their steps.
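A chain-of-thought prompt is just a few worked examples followed by the new question. Here is a small sketch of how you might assemble one. The helper name and dictionary keys are my own, and the two word problems are the well-known examples from the paper.

```python
def build_cot_prompt(exemplars, question):
    """Join worked examples (with reasoning) and end with the new question."""
    parts = []
    for ex in exemplars:
        parts.append(
            f"Q: {ex['question']}\n"
            f"A: {ex['reasoning']} The answer is {ex['answer']}."
        )
    # Leave the final answer open so the model continues with its own reasoning.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)

exemplars = [{
    "question": ("Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
                 "Each can has 3 tennis balls. How many tennis balls does he have now?"),
    "reasoning": ("Roger started with 5 balls. 2 cans of 3 tennis balls each "
                  "is 6 tennis balls. 5 + 6 = 11."),
    "answer": "11",
}]

prompt = build_cot_prompt(
    exemplars,
    "The cafeteria had 23 apples. If they used 20 to make lunch and "
    "bought 6 more, how many apples do they have?",
)
```

You would send `prompt` to whichever model you are using. The worked example nudges it to write out its steps before giving the final answer.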

8️⃣ The Illusion of Thinking

📄 Authors: Shojaee et al. (Apple)

📅 Published: 2025

A critical paper that tests reasoning models on puzzles of increasing complexity and finds that their accuracy collapses beyond a certain difficulty, suggesting they can appear smart without reasoning reliably.

🔍 What You’ll Learn:

- Where AI still falls short

- Why accuracy can collapse once a problem passes a certain complexity

- The limits of pattern-matching vs true reasoning

🧠 Think of it as: Looking behind the curtain of the AI magic show.
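As I understand the setup, the paper uses puzzles whose difficulty can be dialed up, Tower of Hanoi among them, and checks every move the model proposes. Here is a sketch of that kind of test bed: a reference solver plus a checker for any move list. The function names and the peg numbering are my own.

```python
def solve_hanoi(n, source=0, target=2, spare=1):
    """Reference solution: list of (from_peg, to_peg) moves for n disks."""
    if n == 0:
        return []
    return (solve_hanoi(n - 1, source, spare, target)
            + [(source, target)]
            + solve_hanoi(n - 1, spare, target, source))

def is_valid_solution(n, moves):
    """Check a proposed move list, e.g. one parsed from a model's answer."""
    pegs = [list(range(n, 0, -1)), [], []]  # peg 0 holds disks n..1, largest at bottom
    for src, dst in moves:
        if not pegs[src]:
            return False                    # nothing to move from this peg
        disk = pegs[src][-1]
        if pegs[dst] and pegs[dst][-1] < disk:
            return False                    # larger disk placed on a smaller one
        pegs[dst].append(pegs[src].pop())
    return pegs[2] == list(range(n, 0, -1))

# The minimum number of moves doubles (plus one) with every extra disk.
lengths = {n: len(solve_hanoi(n)) for n in range(1, 8)}
```

Since the shortest solution needs `2**n - 1` moves, adding disks is a clean way to raise complexity while keeping the rules identical.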

9️⃣ Distilling the Knowledge in a Neural Network

📄 Authors: Hinton et al.

📅 Published: 2015

This paper popularized knowledge distillation: training a smaller model to mimic the outputs of a larger, more powerful one.

🔍 What You’ll Learn:

- How small models learn from big ones

- Why this technique saves memory and compute

- Its role in mobile AI and edge computing

🧠 Think of it as: Teaching a mini-me everything a genius knows.
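Here is a minimal sketch of the soft-target part of distillation. Both models' logits are softened with a temperature `T`, and the student is trained to match the teacher's softened probabilities. The logits are made-up numbers. In practice this term is usually combined with the ordinary loss on the true labels.

```python
import math

def softmax_t(logits, T=1.0):
    """Softmax with temperature; higher T gives a softer distribution."""
    scaled = [z / T for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, T=2.0):
    """Cross-entropy between softened teacher and student outputs, times T**2."""
    p_teacher = softmax_t(teacher_logits, T)
    p_student = softmax_t(student_logits, T)
    ce = -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))
    # The T**2 factor keeps gradient magnitudes comparable across temperatures.
    return (T ** 2) * ce

# Made-up logits over three classes.
teacher = [2.0, 1.0, 0.1]
good_student = [1.9, 1.1, 0.2]
poor_student = [0.1, 1.0, 2.0]
loss_good = distillation_loss(good_student, teacher)
loss_poor = distillation_loss(poor_student, teacher)
```

The softened teacher output carries more information than a single correct label, because it also shows which wrong answers the teacher considers "almost right".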

🔟 Expanding RL with Verifiable Rewards Across Diverse Domains

📄 Authors: Su et al.

📅 Published: 2025

This paper extends reinforcement learning with verifiable rewards (RLVR) beyond math and coding to broader domains such as medicine, chemistry, psychology, economics, and education, where reference answers are less structured and harder to check.

🔍 What You’ll Learn:

- How rewards affect learning outcomes

- Scaling RL beyond math and coding

- Safer, more general AI training

🧠 Think of it as: Making sure AI is rewarded for the right behavior in the real world.
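The simplest kind of verifiable reward is a function that checks a model's final answer against a known reference and returns 1 or 0. Below is a sketch of that baseline. The "The answer is ..." response format, the normalization rules, and the function names are all my assumptions. Free-form answers in other domains need softer, more flexible checks than this exact match.

```python
import re

def extract_final_answer(response):
    """Pull the text after 'answer is' on the last line (assumed format)."""
    match = re.search(r"answer is\s*(.+?)\s*\.?\s*$", response.strip(),
                      flags=re.IGNORECASE)
    return match.group(1) if match else None

def normalize(text):
    """Lowercase, trim, and drop thousands separators before comparing."""
    return text.strip().lower().replace(",", "")

def verifiable_reward(response, reference):
    """Binary reward: 1.0 if the extracted answer matches the reference, else 0.0."""
    predicted = extract_final_answer(response)
    if predicted is None:
        return 0.0
    return 1.0 if normalize(predicted) == normalize(reference) else 0.0

# Made-up responses scored against a known reference answer.
r_correct = verifiable_reward("5 + 6 = 11. The answer is 11.", "11")
r_wrong = verifiable_reward("5 + 6 = 12. The answer is 12.", "11")
r_no_answer = verifiable_reward("I am not sure.", "11")
```

During RL training, a reward like this replaces a learned reward model wherever a checkable reference answer exists.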

🎓 Abhishek’s Take:

As a technical architect working with cloud + AI daily, I’ve found that reading these foundational papers not only deepens your knowledge but also unlocks creative solutions to real-world problems.

Even if you’re not from a research background — these papers give you insight into how the best minds are shaping AI’s future.

📚 Want to Start Reading?

You don’t need a PhD to understand these papers. I recommend:

- Reading blog summaries first

- Watching explainer videos

- Trying out the models discussed (many are open-source!)

