By Abhishek Kumar | FirstCrazyDeveloper

Deploying your AI model is just the first step. The real magic happens when you refine how it interacts with your users. The way you ask questions (prompts), the context you provide, and the way you guide the model’s behavior can transform average results into brilliant responses.

This process is called model optimization. Let’s break it down in simple terms.

Step 1: The Power of Prompt Engineering

Your model is like a highly intelligent assistant, but it performs only as well as the questions you ask.

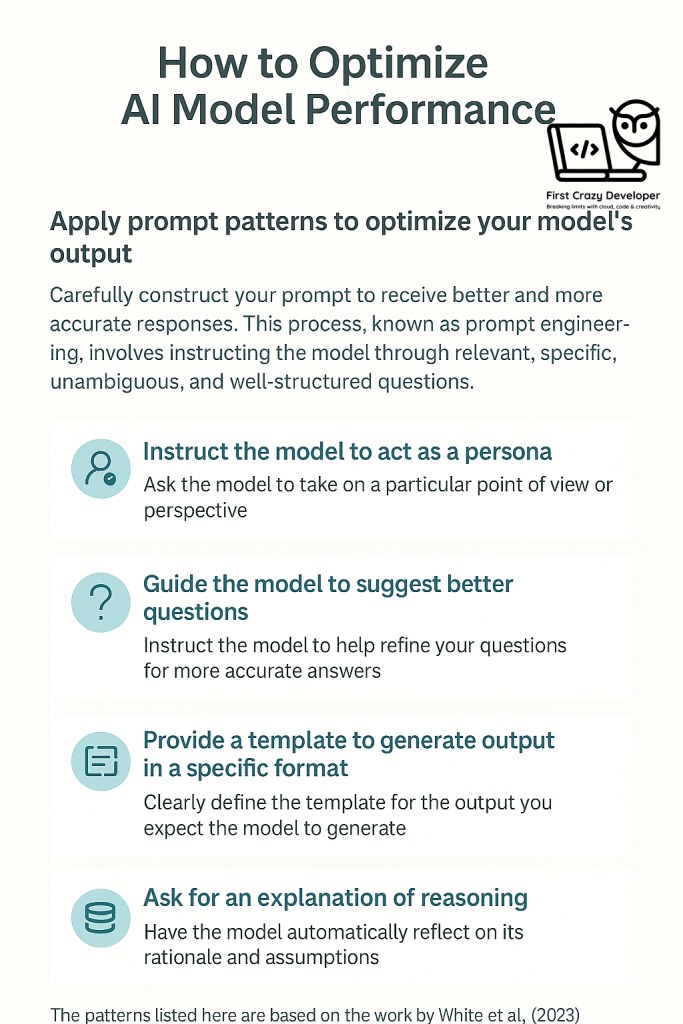

Prompt engineering is the art of crafting clear, specific, and purposeful prompts to get the best possible output from your AI model.

5 Proven Prompting Techniques

- Act as a persona:

Tell the AI who it is supposed to be.

Example: “You are a seasoned marketing professional. Write an engaging product description for a CRM software.”

This way, your model “thinks” like that persona and tailors its answer accordingly.

- Ask for better questions:

Let the AI help you refine your query.

Example: “I need help planning a dinner for four. What additional questions should I answer so you can give me the best recommendations?”

The model will guide you to provide the missing context.

- Specify a format:

If you want a structured answer (like bullet points, tables, or headlines), ask for it.

Example: “Summarize the 2018 FIFA World Cup final in this format: Match date, location, teams, score, and key highlights.”

- Ask for reasoning:

Make the model explain its thinking. This is called chain-of-thought prompting.

Example: “Explain step by step how you solved this math problem.”

- Add context:

Give your AI the background information it needs.

Example: “When should I visit Edinburgh? I want to attend Scotland’s Six Nations rugby matches.”
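
The five techniques above can be combined in one prompt. Here is a minimal Python sketch of that idea. The `build_prompt` helper and its parameter names are made up for illustration and are not part of any library; it only assembles text, so you would still pass the result to whichever model you deployed.

```python
def build_prompt(task, persona=None, context=None, output_format=None,
                 ask_for_reasoning=False, ask_clarifying_questions=False):
    """Assemble one prompt string from the optional prompting techniques.

    Hypothetical helper for illustration only.
    """
    parts = []
    if persona:
        # Technique: act as a persona
        parts.append(f"You are {persona}.")
    if context:
        # Technique: add context
        parts.append(f"Context: {context}")
    parts.append(task)
    if output_format:
        # Technique: specify a format
        parts.append(f"Answer in this format: {output_format}.")
    if ask_for_reasoning:
        # Technique: ask for reasoning (chain-of-thought)
        parts.append("Explain your reasoning step by step.")
    if ask_clarifying_questions:
        # Technique: ask for better questions
        parts.append("Before answering, list any additional questions I should answer first.")
    return "\n".join(parts)


prompt = build_prompt(
    task="Write an engaging product description for a CRM software.",
    persona="a seasoned marketing professional",
    output_format="one headline followed by three bullet points",
)
print(prompt)
```

Keeping each technique as an optional argument makes it easy to switch one on or off and compare the responses you get.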

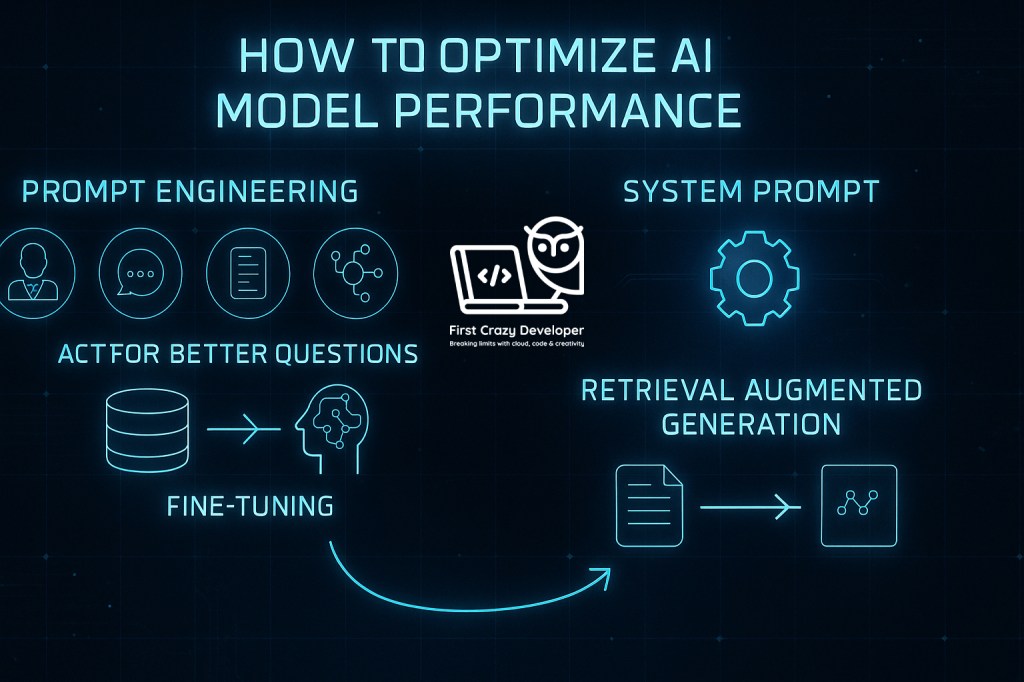

Step 2: Go Beyond Prompts — Add System Instructions

Developers can embed system-level instructions that guide the model’s behavior without exposing them to users.

This helps keep your AI on-brand, accurate, and consistent.
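
As a rough sketch, many chat-style model APIs accept a list of role-tagged messages, with the system instruction placed first. The exact request shape depends on your provider, so treat the `role`/`content` dictionary layout below as an assumption. The instruction text and the `build_messages` helper are invented for this example.

```python
# Hypothetical system instructions; the user never sees this text.
SYSTEM_INSTRUCTIONS = (
    "You are a support assistant for a CRM product. "
    "Keep answers concise and friendly. "
    "If you are not sure about something, say so."
)


def build_messages(user_input, history=None):
    """Put the system instructions in front of every conversation.

    Assumes a role/content message format; check your provider's docs.
    """
    messages = [{"role": "system", "content": SYSTEM_INSTRUCTIONS}]
    messages.extend(history or [])  # earlier turns, if any
    messages.append({"role": "user", "content": user_input})
    return messages


messages = build_messages("How do I export my contacts?")
for message in messages:
    print(message["role"], ":", message["content"])
```

Because the system message is added in code, every user gets the same behind-the-scenes guidance no matter what they type.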

Step 3: Advanced Optimization Strategies

Sometimes, prompt engineering isn’t enough. That’s when you level up with these strategies:

- Retrieval Augmented Generation (RAG):

Imagine your AI consulting a company database or knowledge base before answering.

Example: Employees ask questions about expense policies, and the AI retrieves answers from your actual corporate documents.

- Fine-tuning:

Train the model further with examples of ideal prompts and responses.

This makes it more consistent in style and tone.
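
To make the RAG idea concrete, here is a toy Python sketch: find the most relevant passages, then place them in the prompt. It is a simplification under stated assumptions. Real systems usually retrieve with embeddings and a vector index, while this version just counts shared words. The sample policy texts and the function names are invented for illustration.

```python
import re

# Invented sample documents standing in for a corporate knowledge base.
DOCUMENTS = [
    "Expense policy: meals while travelling are reimbursed up to a daily limit.",
    "Leave policy: employees request vacation through the HR portal.",
    "Expense policy: receipts must be submitted within 30 days of purchase.",
]


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep only documents that share at least one word with the question.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_rag_prompt(question, documents):
    """Put the retrieved passages in front of the question."""
    passages = retrieve(question, documents)
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the passages below. "
        "If they do not contain the answer, say so.\n\n"
        f"Passages:\n{context}\n\nQuestion: {question}"
    )


rag_prompt = build_rag_prompt("When must I submit expense receipts?", DOCUMENTS)
print(rag_prompt)
```

The model only sees the passages you retrieved, so the quality of the answer depends heavily on the quality of the retrieval step.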

Pro Tip:

Start with prompt engineering (cheaper, faster), then consider RAG and fine-tuning for long-term reliability.

When to Use What

- Optimize for context:

Use RAG when your model needs access to up-to-date or specialized information.

- Optimize for consistency:

Use fine-tuning when your model must deliver answers in a specific tone or style.
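
If you do go the fine-tuning route, the training data is a set of example prompts paired with ideal responses. Many services take this as a JSON Lines file, one example per line, but the exact schema varies by provider. The `messages` layout below is an assumption, and the sample pairs and the `to_jsonl` helper are invented for illustration.

```python
import json

# Invented prompt/response pairs showing the tone we want the model to learn.
examples = [
    {
        "prompt": "Describe our CRM in one sentence.",
        "response": "Our CRM keeps every customer conversation in one friendly place.",
    },
    {
        "prompt": "How do I reset my password?",
        "response": "No problem! Click 'Forgot password' on the sign-in page and follow the email link.",
    },
]


def to_jsonl(examples):
    """Convert prompt/response pairs to one JSON object per line.

    The 'messages' schema is an assumption; check your provider's format.
    """
    lines = []
    for example in examples:
        record = {
            "messages": [
                {"role": "user", "content": example["prompt"]},
                {"role": "assistant", "content": example["response"]},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


jsonl_text = to_jsonl(examples)
print(jsonl_text)
```

Two examples are only enough to show the shape. The goal is a larger set of pairs that all share the style and tone you want.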

To recap, this post breaks down how to supercharge your AI models using:

- Prompt Engineering (better questions = better answers)

- System Prompts (guide your AI behind the scenes)

- Retrieval Augmented Generation (RAG) (make your AI consult real data)

- Fine-tuning (train it to match your style & tone)

Abhishek’s Notes:

Prompt engineering is like teaching your AI how to listen and respond. RAG grounds its answers in your own up-to-date data. Fine-tuning makes it sound like “you.” Use them together for the best results.
