by Abhishek Kumar | FirstCrazyDeveloper

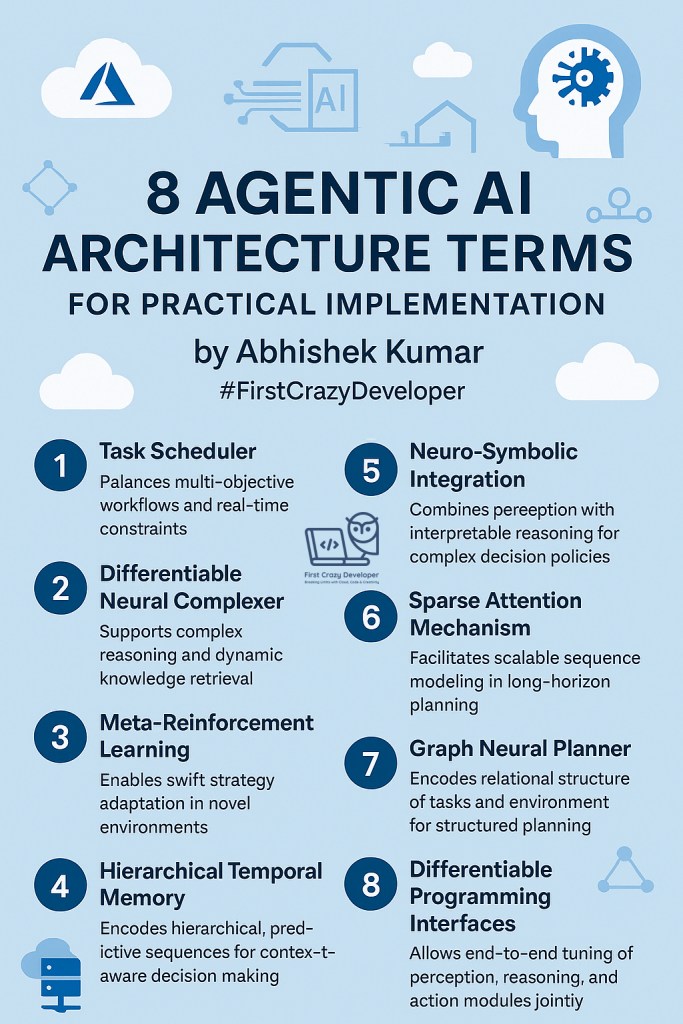

Artificial Intelligence is moving beyond static models. We are now in the Agentic AI era, where systems are not just predicting text or images but acting as autonomous problem solvers. These AI agents combine reasoning, planning, memory, and adaptability to tackle complex, real-world workflows.

But what makes these agents truly agentic?

The answer lies in their architecture—a fusion of advanced neural methods, symbolic reasoning, and planning strategies.

In this blog, we’ll break down 8 essential Agentic AI architecture terms, explain their role, and show you how developers can practically implement them.

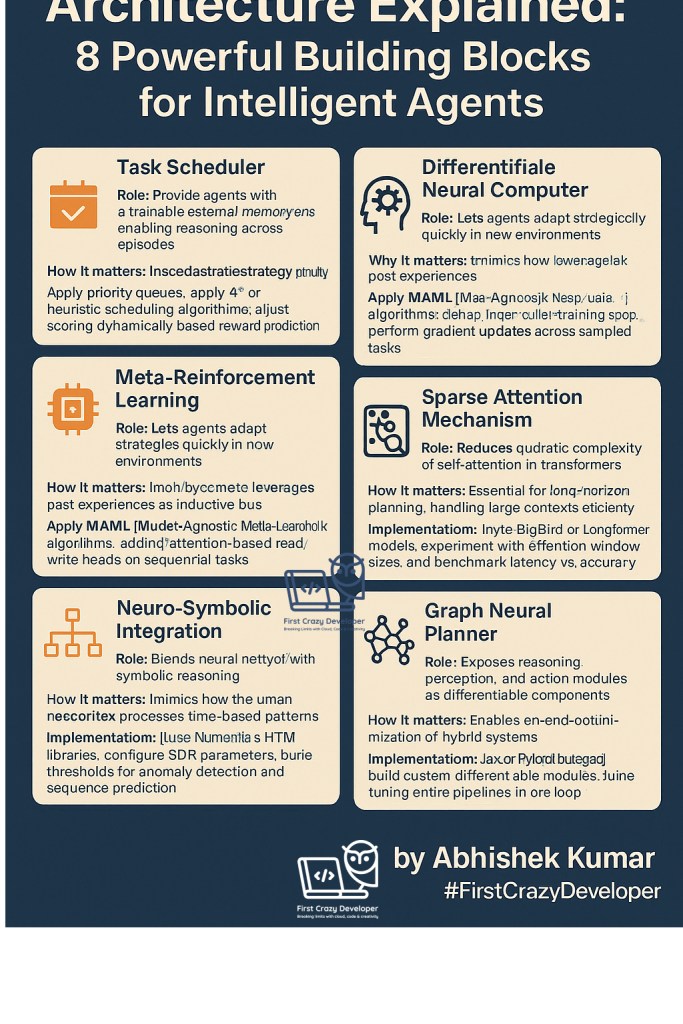

1️⃣ Task Scheduler – The Brain’s Timekeeper

- Role: Decides which task gets priority based on deadlines, dependencies, and overall utility.

- Why it matters: Just as an OS scheduler manages CPU cycles, an AI agent needs a scheduler to balance competing goals in real time.

- How to implement:

  - Use priority queues

  - Apply heuristic scheduling rules (for example, earliest deadline first), or run A* search over possible task orderings

  - Adjust scoring dynamically based on reward predictions

👉 Example: In a warehouse AI agent, the scheduler decides whether to prioritize “restock shelf” over “optimize delivery route.”
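
To make the scheduler idea concrete, here is a minimal sketch built on Python's `heapq`. The `Task` fields, the `score` formula (utility divided by remaining slack), and the one-time-unit-per-task clock are all assumptions made for illustration, not a standard algorithm.

```python
import heapq
from dataclasses import dataclass, field

@dataclass
class Task:
    name: str
    deadline: float                      # hypothetical: time units until due
    utility: float                       # hypothetical: value of finishing the task
    depends_on: set = field(default_factory=set)

def score(task, now):
    """Higher means more urgent: utility divided by the remaining slack."""
    slack = max(task.deadline - now, 1e-6)
    return task.utility / slack

def schedule(tasks):
    """Return task names in execution order, honoring dependencies."""
    done, order = set(), []
    pending = {t.name: t for t in tasks}
    while pending:
        now = len(order)  # assumption: every task takes one time unit
        # heapq is a min-heap, so negative scores put the most urgent task first.
        ready = [(-score(t, now), t.name)
                 for t in pending.values() if t.depends_on <= done]
        if not ready:
            raise ValueError("dependency cycle or unknown dependency")
        heapq.heapify(ready)
        _, name = heapq.heappop(ready)
        order.append(name)
        done.add(name)
        del pending[name]
    return order

tasks = [
    Task("optimize delivery route", deadline=8.0, utility=4.0),
    Task("restock shelf", deadline=2.0, utility=3.0),
    Task("dispatch truck", deadline=4.0, utility=10.0,
         depends_on={"optimize delivery route"}),
]
order = schedule(tasks)
print(order)
```

Scores are recomputed every round, so a changed deadline or a new reward estimate would be picked up on the next pick. A production scheduler would also have to handle preemption and tasks arriving mid-run, which this sketch ignores.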

2️⃣ Differentiable Neural Computer (DNC) – Memory That Learns

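- Role: Gives the agent an external memory that it learns to read and write, so it can reason over information from many steps back.

- Why it matters: An LSTM has to compress everything into a fixed-size hidden state, while a DNC can write to and read from addressable memory slots, which helps with structured data such as graphs and lists.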

- How to implement:

  - Combine an RNN controller with a memory matrix

  - Add attention-based read/write heads

  - Train using TensorFlow or PyTorch on sequential tasks

👉 Think of it as giving your AI agent a “whiteboard” to store intermediate results while planning.
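
The "whiteboard" can be sketched without any framework. The snippet below shows only content-based addressing (a soft read) and an erase-then-add write, which are two pieces of a DNC. A full DNC also tracks memory usage and the temporal order of writes, and the read key would come from a trained controller rather than being typed in by hand. The function names and the `beta` sharpness value are illustrative assumptions.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-8)

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def content_read(memory, key, beta=5.0):
    """Soft read: attention weights over memory rows, then a weighted sum."""
    weights = softmax([beta * cosine(row, key) for row in memory])
    width = len(memory[0])
    read = [sum(w * row[j] for w, row in zip(weights, memory))
            for j in range(width)]
    return weights, read

def write(memory, weights, erase, add):
    """Soft write: scale each row down by the erase vector, then blend in add."""
    return [
        [m * (1 - w * e) + w * a for m, e, a in zip(row, erase, add)]
        for w, row in zip(weights, memory)
    ]

memory = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
weights, read_vector = content_read(memory, key=[0.9, 0.1, 0.0])
updated = write(memory, [1.0, 0.0, 0.0],
                erase=[1.0, 1.0, 1.0], add=[0.5, 0.5, 0.5])
```

Because both operations are weighted sums, gradients can flow through them, which is what lets the controller learn where to read and write.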

3️⃣ Meta-Reinforcement Learning – Learning How to Learn

- Role: Lets agents adapt their strategies quickly in new environments.

- Why it matters: Instead of retraining from scratch, the agent uses experience from earlier tasks as a learned prior, so a handful of updates is enough to adapt.

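- How to implement:

  - Apply a meta-learning algorithm such as MAML (Model-Agnostic Meta-Learning)

  - Define two training loops: an inner loop that adapts to a single task and an outer loop that updates the shared initialization

  - Average the outer-loop gradient across a batch of sampled tasks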

👉 Example: A game-playing AI can adapt to a new game much faster by leveraging strategies learned from older ones.
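
The inner/outer loop structure is easier to see on a toy problem. The sketch below runs MAML-style updates on one scalar parameter, where each "task" is a made-up quadratic loss with its own target. It is a supervised stand-in, not reinforcement learning: in meta-RL the same two loops would wrap policy-gradient losses computed from rollouts. For brevity it also reuses the same loss for the inner and outer step instead of separate support and query data.

```python
def loss_grad(theta, target):
    """Gradient of the per-task loss 0.5 * (theta - target) ** 2."""
    return theta - target

def maml_step(theta, targets, inner_lr=0.1, outer_lr=0.5):
    meta_grad = 0.0
    for target in targets:
        # Inner loop: one gradient step adapts theta to this task.
        adapted = theta - inner_lr * loss_grad(theta, target)
        # Outer gradient: chain rule through the inner step.
        # d(adapted)/d(theta) = 1 - inner_lr * (second derivative) = 1 - inner_lr
        meta_grad += loss_grad(adapted, target) * (1.0 - inner_lr)
    # Outer loop: move the shared initialization using the averaged gradient.
    return theta - outer_lr * meta_grad / len(targets)

targets = [-1.0, 1.0, 3.0]   # hypothetical tasks
theta = 10.0
for _ in range(200):
    theta = maml_step(theta, targets)
print(round(theta, 6))
```

The initialization settles at the point from which one inner step gets closest to every task on average. For these symmetric quadratics that is simply the mean of the targets.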

4️⃣ Hierarchical Temporal Memory (HTM) – Inspired by the Brain

- Role: Learns sequences of sparse patterns and predicts what should come next, which the agent can use for decision-making.

- Why it matters: It is modeled on a theory of how the human neocortex processes time-based patterns.

- How to implement:

  - Use Numenta’s HTM libraries, such as NuPIC or the community-maintained htm.core fork

  - Configure SDR (Sparse Distributed Representation) parameters

  - Tune thresholds for anomaly detection and sequence prediction

👉 Perfect for agents handling time-series data, like predictive maintenance in IoT.
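
To show what an SDR and an anomaly score look like, here is a library-free sketch. It represents an SDR as a set of active bit indices and scores surprise as the fraction of active bits that were not predicted. The `FirstOrderMemory` class is a deliberately crude stand-in invented for this example: it remembers only the previous step, whereas HTM temporal memory learns longer contexts. It is not Numenta's API.

```python
import random

def random_sdr(size=2048, active=40, seed=0):
    """An SDR as the set of active bit indices (about 2% sparsity here)."""
    rng = random.Random(seed)
    return frozenset(rng.sample(range(size), active))

def overlap(a, b):
    """Number of shared active bits, the basic SDR similarity measure."""
    return len(a & b)

def anomaly_score(predicted, actual):
    """Fraction of active bits that were not predicted (0 expected, 1 surprise)."""
    if not actual:
        return 0.0
    return 1.0 - overlap(predicted, actual) / len(actual)

class FirstOrderMemory:
    """Toy sequence memory: predicts the pattern that last followed the previous one."""
    def __init__(self):
        self.transitions = {}
        self.previous = None

    def step(self, sdr):
        predicted = self.transitions.get(self.previous, frozenset())
        score = anomaly_score(predicted, sdr)
        if self.previous is not None:
            self.transitions[self.previous] = sdr   # learn the transition
        self.previous = sdr
        return score

a, b, c = (random_sdr(seed=s) for s in (1, 2, 3))
memory = FirstOrderMemory()
scores = [memory.step(x) for x in (a, b, a, b, a, c)]
```

Once the a, b, a, b rhythm has been seen, the score for the repeated steps drops to zero, and the unexpected `c` at the end pushes it back up. That jump is the signal a predictive-maintenance agent would threshold on.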

5️⃣ Neuro-Symbolic Integration – Best of Both Worlds

- Role: Blends neural networks (pattern recognition) with symbolic reasoning (logic & rules).

- Why it matters: Makes black-box models easier to interpret, because the symbolic part exposes which rules shaped a decision.

- How to implement:

  - Embed knowledge graphs

  - Add differentiable logic layers

  - Train end-to-end with joint loss functions

👉 Example: An AI doctor can recognize medical images (neural) and also apply clinical rules (symbolic) before making a diagnosis.
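
One way to read "differentiable logic layers" and "joint loss functions" is to relax logical operators into arithmetic on probabilities and then add a penalty when a rule is violated. The sketch below uses the product t-norm and the Reichenbach implication, which are common choices in the fuzzy-logic literature. The rule itself ("a positive diagnosis should come with a supporting lab result") and the probabilities are made up for illustration and are not clinical guidance.

```python
import math

def soft_and(a, b):
    return a * b                    # product t-norm

def soft_not(a):
    return 1.0 - a

def soft_implies(a, b):
    return 1.0 - a + a * b          # Reichenbach implication

def cross_entropy(p, label):
    p = min(max(p, 1e-7), 1 - 1e-7)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))

def joint_loss(p_diagnosis, p_lab_support, label, rule_weight=0.5):
    """Task loss from the neural output plus a penalty for breaking the rule."""
    # Hypothetical rule: diagnosis -> lab_support
    rule_truth = soft_implies(p_diagnosis, p_lab_support)
    total = cross_entropy(p_diagnosis, label) + rule_weight * (1.0 - rule_truth)
    return total, rule_truth

# The image model is confident in both cases; only the lab evidence differs.
consistent, truth_ok = joint_loss(p_diagnosis=0.9, p_lab_support=0.95, label=1)
violating, truth_bad = joint_loss(p_diagnosis=0.9, p_lab_support=0.10, label=1)
```

Since every operator is plain multiplication and addition, the rule penalty has gradients with respect to the network outputs, so in a framework like PyTorch it could be trained jointly with the task loss. The `rule_truth` value doubles as a simple explanation of how well a prediction agrees with the rule.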

6️⃣ Sparse Attention Mechanism – Scaling Long Sequences

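- Role: Cuts the cost of transformer self-attention, which grows quadratically with sequence length, by letting each token attend to only a subset of positions. In BigBird and Longformer the cost becomes roughly linear.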

- Why it matters: Essential for long-horizon planning and handling large contexts efficiently.

- How to implement:

  - Use BigBird or Longformer models

  - Experiment with attention window sizes

  - Benchmark latency vs. accuracy

👉 Imagine an AI agent planning months-long logistics—it needs sparse attention to efficiently reason over vast timelines.
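
The savings are easy to count. The sketch below builds a sliding-window mask with optional global tokens, in the spirit of Longformer's local plus global pattern, and compares the number of allowed query-key pairs with dense attention. The function names are illustrative, and the full n-by-n mask is materialized only to make counting simple. Real implementations avoid building it.

```python
def sliding_window_mask(n, window, global_tokens=()):
    """mask[i][j] is True when query i may attend to key j."""
    global_set = set(global_tokens)
    mask = []
    for i in range(n):
        row = []
        for j in range(n):
            local = abs(i - j) <= window
            # Global tokens attend everywhere and are visible to everyone.
            row.append(local or i in global_set or j in global_set)
        mask.append(row)
    return mask

def attended_pairs(mask):
    return sum(sum(row) for row in mask)

n, window = 64, 2
mask = sliding_window_mask(n, window, global_tokens=[0])
dense = n * n
sparse = attended_pairs(mask)
print(dense, sparse)
```

With a fixed window, the number of pairs grows in proportion to `n` rather than `n * n`. The window size is the knob to benchmark: wider windows see more context per layer but cost more.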

7️⃣ Graph Neural Planner – AI That Thinks in Graphs

- Role: Represents environments and tasks as graphs of nodes and edges, then plans over that structure.

- Why it matters: Perfect for planning in relational environments like logistics, supply chains, or social networks.

- How to implement:

  - Use NetworkX to build and inspect graph structures

  - Train the GNN with PyTorch Geometric

  - Train on datasets of state-transition graphs

👉 Example: A delivery agent planning multi-city routes can use GNNs to model and optimize decisions.
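
The core mechanic of a GNN is message passing: every node repeatedly collects messages from its neighbors and aggregates them. The sketch below uses a hand-written message ("edge cost plus the neighbor's value") and a `min` aggregation, which turns message passing into the classic Bellman-Ford cost-to-go computation. A trained graph neural planner would replace both with learned functions. The road network and its costs are invented for the example.

```python
import math

# Hypothetical road network: city -> {neighbor: travel cost}
graph = {
    "A": {"B": 4, "C": 1},
    "B": {"D": 1},
    "C": {"B": 2, "D": 6},
    "D": {},
}

def cost_to_go(graph, goal):
    """Run rounds of message passing until node values stop changing."""
    value = {node: math.inf for node in graph}
    value[goal] = 0.0
    for _ in range(len(graph) - 1):
        updated = dict(value)
        for node, edges in graph.items():
            # Each neighbor sends "edge cost + my value"; aggregate with min.
            messages = [cost + value[nbr] for nbr, cost in edges.items()]
            if messages and node != goal:
                updated[node] = min(messages)
        if updated == value:
            break
        value = updated
    return value

def plan(graph, start, goal):
    """Follow the cheapest next hop according to the node values."""
    value = cost_to_go(graph, goal)
    if math.isinf(value[start]):
        return None                      # goal unreachable from start
    route = [start]
    while route[-1] != goal:
        node = route[-1]
        route.append(min(graph[node],
                         key=lambda nbr: graph[node][nbr] + value[nbr]))
    return route

route = plan(graph, "A", "D")
print(route)
```

The plan goes through C and B instead of taking the direct A to B road, because the aggregated node values show that the detour is cheaper overall.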

8️⃣ Differentiable Programming Interfaces – Connecting the Dots

- Role: Exposes reasoning, perception, and action modules as differentiable components.

- Why it matters: Enables end-to-end optimization of hybrid systems.

- How to implement:

  - Use JAX or PyTorch autograd

  - Build custom differentiable modules (e.g., physics simulators)

  - Fine-tune entire pipelines in one loop

👉 Example: A robotics AI where vision (CNNs), physics simulation, and control policies are optimized together.
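
To see what "optimized together" means without installing JAX or PyTorch, here is a tiny forward-mode autodiff built from dual numbers. A made-up proportional controller and a one-line Euler physics step are chained, and the derivative of the final loss with respect to the controller gain flows through both. The `Dual` class and both module functions are illustrative assumptions. In practice the framework's autograd does this for you, usually in reverse mode.

```python
class Dual:
    """A value paired with its derivative with respect to one chosen parameter."""
    def __init__(self, value, grad=0.0):
        self.value, self.grad = value, grad

    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(x)

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.value + other.value, self.grad + other.grad)
    __radd__ = __add__

    def __sub__(self, other):
        other = Dual.lift(other)
        return Dual(self.value - other.value, self.grad - other.grad)

    def __rsub__(self, other):
        return Dual.lift(other) - self

    def __mul__(self, other):
        other = Dual.lift(other)
        return Dual(self.value * other.value,
                    self.grad * other.value + self.value * other.grad)
    __rmul__ = __mul__

def controller(gain, error):
    """Control module: push proportionally to the remaining error."""
    return gain * error

def physics(position, force, dt=0.1):
    """Physics module: one explicit Euler step."""
    return position + force * dt

def loss(gain, position=0.0, target=1.0, steps=5):
    for _ in range(steps):
        position = physics(position, controller(gain, target - position))
    miss = target - position
    return miss * miss

gain = 1.0
initial = loss(Dual(gain, 1.0))          # grad=1.0 marks gain as the parameter
for _ in range(100):
    gain -= 0.5 * loss(Dual(gain, 1.0)).grad   # gradient descent on the gain
final = loss(Dual(gain, 1.0))
```

The gradient passes through the simulator and the controller in a single pass, so tuning the gain takes the physics into account automatically. Swapping in a vision module would work the same way as long as it is built from differentiable operations.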

🔑 Key Takeaway for Developers

Agentic AI isn’t built with a single model—it’s an ecosystem of architectural elements working together:

- Schedulers to prioritize

- Memory systems to reason

- Meta-learning to adapt

- Temporal memory to predict

- Neuro-symbolic logic to explain

- Sparse attention to scale

- Graph planners to structure

- Differentiable programming to unify

By mastering these building blocks, developers can move from building static AI models to dynamic, self-improving AI agents ready for real-world applications.

✨ This is just the beginning. Future AI systems will be modular, adaptive, and explainable, and these 8 terms form the foundation of that future.
