✍️ By Abhishek Kumar | #FirstCrazyDeveloper

🧠 Why This Change Matters

Our data pipelines were fast — until they weren’t.

Large joins started crashing memory, inconsistent datetime types caused nightly job failures, and debugging hidden Python logic in Jupyter notebooks became a nightmare.

So, we did something radical.

We banned pandas.

Every transformation now runs in DuckDB, using pure SQL, directly on Parquet files in Azure Blob Storage.

And the results?

Our pipelines are faster, easier to debug, and simpler to maintain.

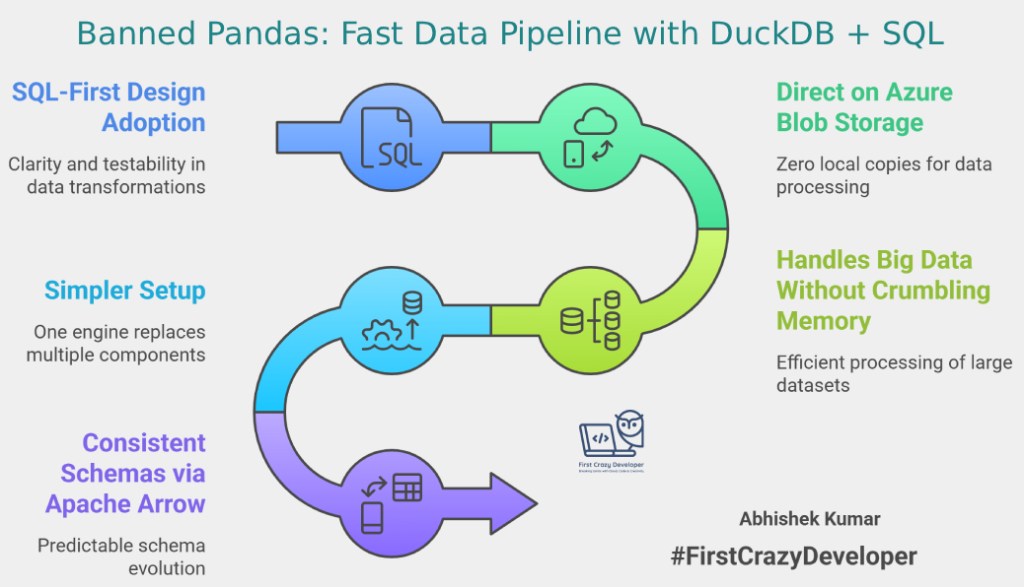

⚙️ 1️⃣ SQL-First Design = Reviewable and Reproducible

Traditional Pandas pipelines scatter logic across multiple scripts and functions.

With DuckDB, every transformation is just a SQL query — easy to review, test, and reproduce.

Example (Pandas ➡ DuckDB)

Before – Pandas

import pandas as pd

orders = pd.read_parquet('orders.parquet')

orders = orders[orders['amount'] > 0]

result = orders.groupby('customer_id')['amount'].sum().reset_index()

After – DuckDB (SQL-Only)

SELECT customer_id, SUM(amount) AS total_amount

FROM 'az://company-data/orders/*.parquet'

WHERE amount > 0

GROUP BY customer_id;

No hidden logic, no scattered functions — just clean, testable SQL.

☁️ 2️⃣ Direct on Azure Blob Storage = Zero Local Copies

DuckDB reads Parquet files directly from Azure Blob Storage using its built-in Azure extension.

No staging, no downloads, no temporary local storage.

INSTALL azure;

LOAD azure;

CREATE SECRET az_conn (TYPE AZURE, CONNECTION_STRING 'DefaultEndpointsProtocol=...');

SELECT *

FROM 'az://company-data/orders/date=2025-10-*/part-*.parquet'

WHERE region = 'EU';

💡 Unlike Pandas, which loads data into memory, DuckDB queries the files in place — even large datasets that exceed RAM.

🧩 3️⃣ Handles Big Data Without Crumbling Memory

Pandas DataFrames live entirely in memory; join two large DataFrames and you’ll often hit OOM.

DuckDB was built differently:

- Columnar storage + vectorized execution

- Predicate & projection pushdown

- Works seamlessly with Parquet and Arrow

- Spills gracefully beyond RAM

SELECT region, SUM(sales)

FROM 'az://company-data/sales/**/*.parquet'

WHERE sales_date >= DATE '2025-01-01'

GROUP BY region;

This can easily scan hundreds of millions of rows without memory errors.

🧱 4️⃣ Simpler Setup = One Engine to Rule Them All

We replaced a multi-layer stack:

pandasfor DataFramespyarrowfor I/OSQLAlchemyfor SQL interfacing- temp SQLite/Postgres for joins

➡️ With just DuckDB + Parquet + Arrow

No extra dependencies, no environment mismatch, no need to maintain multiple engines.

DuckDB handles ingest + transform + export in one consistent runtime.

🧮 5️⃣ Consistent Schemas via Apache Arrow

If you’ve ever fought with Pandas dtype quirks (object vs string, timezone offsets, nullable ints), you’ll love DuckDB’s Arrow-based schema consistency.

DuckDB keeps everything aligned — column names, data types, nested structs — across the pipeline.

✅ Fewer type mismatches

✅ No datetime conversion bugs

✅ Predictable schema evolution

🧠 Real-World Example: Customer Revenue Aggregation

Here’s how a full transformation pipeline now looks.

WITH orders AS (

SELECT *

FROM 'az://data/orders/date=2025-10-*/part-*.parquet'

),

filtered AS (

SELECT order_id, customer_id, amount, order_ts::TIMESTAMP AS ts

FROM orders

WHERE amount > 0

),

enriched AS (

SELECT f.*, c.segment

FROM filtered f

JOIN 'az://data/customers/*.parquet' c USING (customer_id)

)

SELECT segment, DATE_TRUNC('day', ts) AS day, SUM(amount) AS revenue

FROM enriched

GROUP BY 1, 2

ORDER BY day;

This single SQL script performs filtering, joining, and aggregation — without touching a DataFrame.

It’s fully auditable and can be wrapped in automated tests.

⚖️ Decision Matrix: When to Choose DuckDB vs Pandas

| Use Case | DuckDB | Pandas |

|---|---|---|

| Querying Parquet in Azure Blob | ✅ Native support (no local copies) | ⚠️ Requires downloads / in-memory load |

| Big Joins & Aggregations | ✅ Handles larger-than-RAM | 🚫 Crashes on large joins |

| SQL Auditability & Testing | ✅ 100 % SQL based | ⚠️ Hidden Python logic |

| Schema Consistency | ✅ Arrow-based types | ⚠️ dtype mismatch headaches |

| EDA / Feature Engineering | ⚠️ SQL only | ✅ Rich Python ecosystem |

If your workflow centers around data pipelines, not EDA, DuckDB wins — hands down.

🏗️ Migration Patterns (From Pandas to SQL)

| Pandas Pattern | DuckDB SQL Equivalent |

|---|---|

df.query('amount>0') | SELECT * FROM t WHERE amount > 0 |

pd.merge(df1, df2, on='id') | SELECT * FROM t1 JOIN t2 USING (id) |

df.groupby(['region']).sum() | SELECT region, SUM(val) FROM t GROUP BY region |

pd.concat([df1, df2]) | SELECT * FROM t1 UNION ALL SELECT * FROM t2 |

🔍 When You Still Need Pandas (Optional Cases)

Pandas still shines for:

- Quick ad-hoc exploration (small < 1 GB data)

- Integrating machine-learning feature engineering in Python

- Tight loops with NumPy/Scikit-Learn

But if your pipelines live in production — DuckDB’s SQL-first design makes debugging, scaling, and maintenance dramatically easier.

🧩 Final Verdict

We didn’t tune Pandas.

We removed it.

Now, every transformation:

- Runs directly on Azure Blob (Parquet + Arrow)

- Uses only DuckDB SQL

- Is auditable, testable, and reproducible

- Scales from MB to hundreds of GB without memory pain

DuckDB is not just a “smaller database.”

It’s a new paradigm for analytical pipelines — one that fits the modern cloud native data stack perfectly.

✍️ Abhishek Take

If your data workflow starts with “load into pandas,” you’ve already limited yourself to RAM.

If it starts with “run SQL on storage,” you’ve unlocked scalability by design.The best optimization isn’t tuning Pandas — it’s removing it.

🧭 References

- DuckDB Official Docs – Azure Extension & SQL Interface (duckdb.org/docs)

- Apache Arrow Interchange Format Docs

- Pandas Performance Notes & Scaling Guide

- DuckDB Benchmark Tracking Portal

#DuckDB #DataEngineering #SQL #AzureBlob #Parquet #Arrow #BigData #Azure #CloudArchitecture #Python #Pandas #Performance #LinkedInTech #TechCommunity

Leave a comment