✍️ by Abhishek Kumar | #FirstCrazyDeveloper

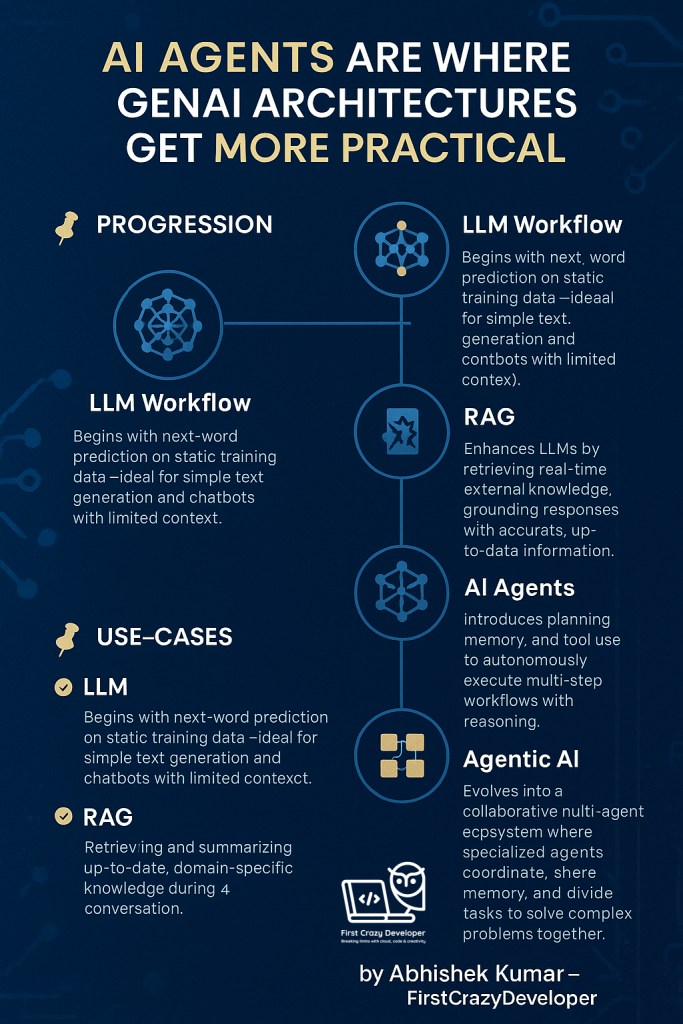

“LLMs talk. RAGs talk smarter. AI Agents act. Agentic AI collaborates.”

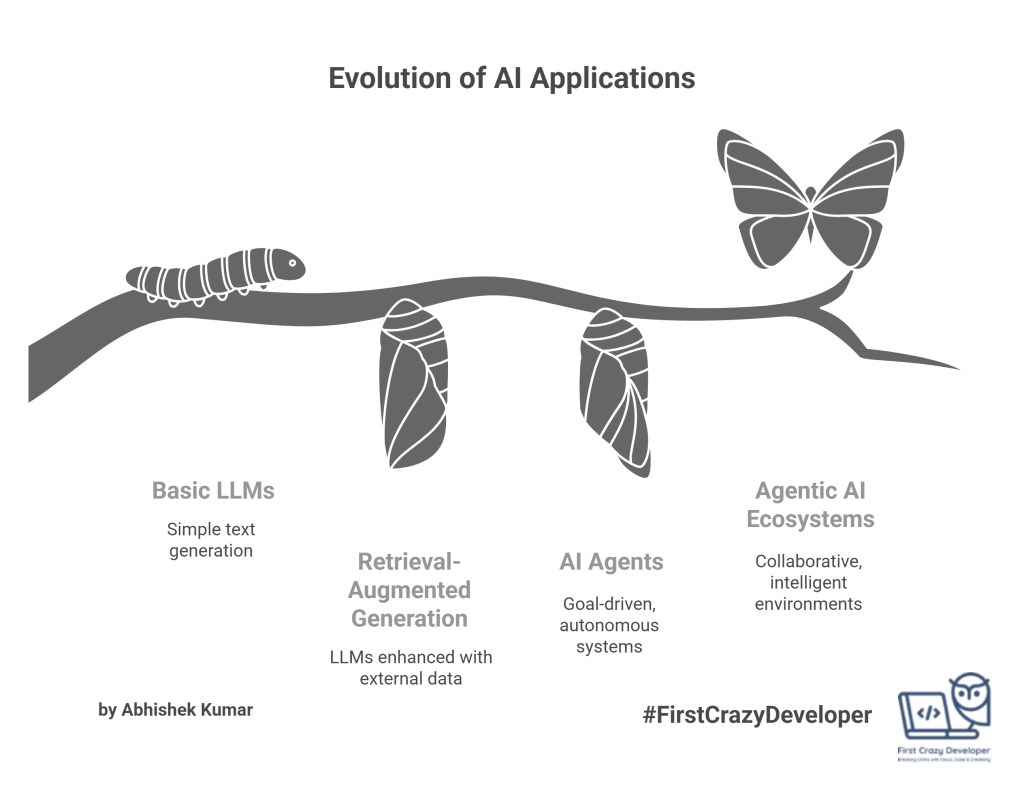

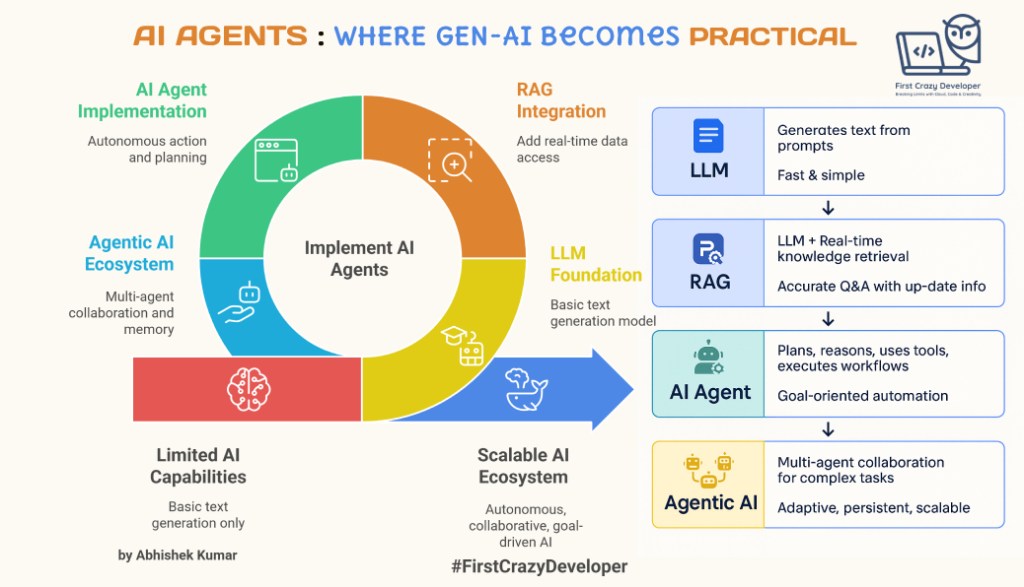

Generative AI is no longer just about writing poems or drafting emails. It’s evolving into intelligent systems that plan, reason, take actions, and even collaborate to solve real-world problems.

This blog is your step-by-step guide to understanding the practical evolution of AI—from simple LLMs to multi-agent ecosystems—and how you, as a developer, can start building at each level.

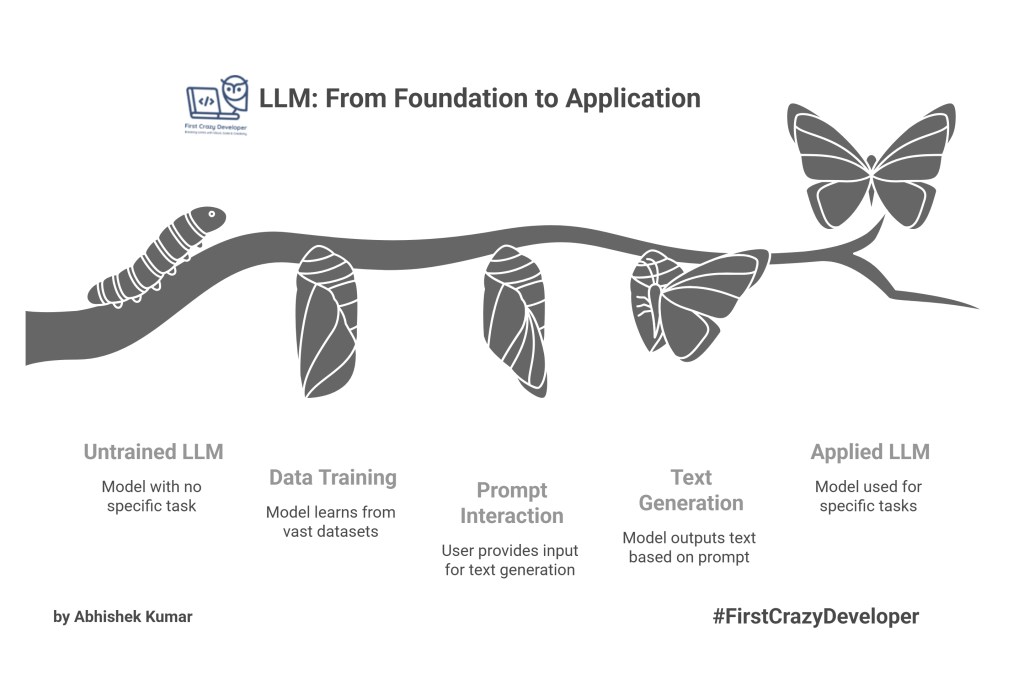

🔹 Stage 1: LLM (Large Language Model) – The Foundation

🔧 What it is:

An LLM, such as OpenAI’s GPT, Google’s PaLM, or Meta’s LLaMA, is a model that generates text based on patterns learned from its training data. On its own, it can use only what it learned during training plus whatever you include in the prompt; it has no access to real-time data or external knowledge sources. The interaction is essentially between you and your prompt.

🛠 Real-world Dev Example:

```python
from openai import OpenAI

# Reads the API key from the OPENAI_API_KEY environment variable.
client = OpenAI()

response = client.chat.completions.create(
    model="gpt-4",
    messages=[{"role": "user", "content": "Write a thank-you email to a recruiter."}],
)

print(response.choices[0].message.content)
```

✅ Best Use Cases:

- Chatbots with basic, in-conversation memory

- Email writing tools

- Creative writing prompts

⚠️ Limitations:

- No external data access

- No memory across sessions

- Can’t “act” — it just “talks”
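The "basic memory" of an LLM chatbot lives in your application, not in the model: each request resends the earlier messages, and nothing survives once the process ends unless you store it. Here is a minimal sketch of that idea, assuming a hypothetical `ChatSession` class and a stand-in `fake_llm` function in place of a real API call (in practice, `llm` would wrap `client.chat.completions.create`):

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class ChatSession:
    """Keeps the conversation on the client side; the model itself is stateless."""

    def __init__(self, llm: Callable[[List[Message]], str], max_turns: int = 10):
        self.llm = llm              # any function mapping a message list to reply text
        self.max_turns = max_turns  # size of the window resent on each request
        self.history: List[Message] = []

    def ask(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        # Resend the new question plus up to max_turns - 1 earlier exchanges.
        window = self.history[-(2 * self.max_turns - 1):]
        reply = self.llm(window)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def fake_llm(messages: List[Message]) -> str:
    # Stand-in for a real API call: reports how much context it received.
    return f"I can see {len(messages)} message(s)."


chat = ChatSession(fake_llm, max_turns=2)
print(chat.ask("Hi, I'm Sam."))     # I can see 1 message(s).
print(chat.ask("What's my name?"))  # I can see 3 message(s).
print(chat.ask("Thanks!"))          # I can see 3 message(s): the oldest exchange was dropped
```

Capping the resent window with `max_turns` is one simple way to stay inside the model's context limit; persisting `history` to a database is what would give you memory across sessions.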

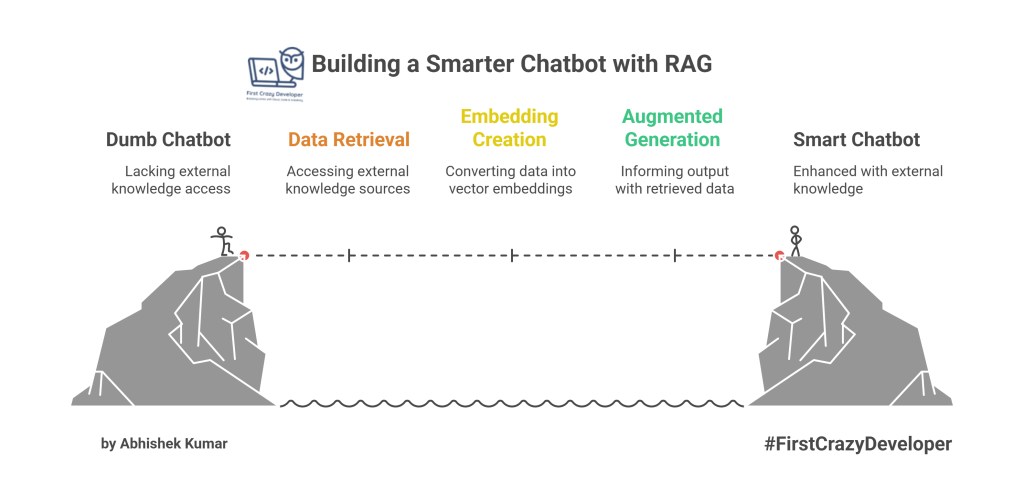

🔹 Stage 2: RAG (Retrieval-Augmented Generation) – The Smarter Chatbot

🔧 What it is:

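RAG architecture enhances LLMs by connecting them to external knowledge sources such as documents, APIs, or databases. Documents are typically embedded ahead of time and stored in an index; at query time, the system retrieves the most relevant pieces and adds them to the prompt, so the model’s answer draws on current, domain-specific data rather than only its training data.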
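The retrieve-then-generate flow can be sketched without any framework. This toy version is a sketch under stated assumptions, not how LangChain or a vector DB works internally: it swaps real embeddings for a bag-of-words `Counter` and ranks documents by cosine similarity. `embed`, `retrieve`, and `build_prompt` are hypothetical helper names, and the returned prompt is what you would send to the LLM.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[Tuple[float, str]]:
    # Score every document against the query and keep the k most similar.
    q = embed(query)
    scored = [(cosine(q, embed(doc)), doc) for doc in docs]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    # Augment: put the retrieved text into the prompt the LLM will see.
    context = "\n".join(f"- {doc}" for _, doc in retrieve(query, docs, k))
    return f"Answer using only the context below.\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "Return policy: items can be returned within 30 days for a full refund.",
    "Shipping is free on orders over 50 dollars.",
    "Our support team is available Monday to Friday.",
]
print(build_prompt("What is the return policy?", docs, k=1))
```

A production system would replace `embed` with a real embedding model, precompute document vectors into an index (FAISS, Pinecone, Weaviate), and split long documents into chunks before indexing.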

🛠 Real-world Dev Stack:

- LangChain or LlamaIndex

- Vector DB like Pinecone, Weaviate, FAISS

- OpenAI embeddings + GPT-4

🛠 Dev Example (LangChain-style):

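```python
# Assumes LangChain 0.2/0.3-era packages: langchain, langchain-community, langchain-openai, faiss-cpu.
from langchain.chains import RetrievalQA
from langchain_community.vectorstores import FAISS
from langchain_openai import OpenAI, OpenAIEmbeddings

# Load an index previously saved with db.save_local("my_documents");
# queries must be embedded with the same model that built the index.
db = FAISS.load_local(
    "my_documents",
    OpenAIEmbeddings(),
    allow_dangerous_deserialization=True,  # only for index files you created yourself
)

qa = RetrievalQA.from_chain_type(llm=OpenAI(), retriever=db.as_retriever())

result = qa.invoke({"query": "What is the return policy in this document?"})
print(result["result"])
```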

✅ Best Use Cases:

- Legal assistants fetching laws

- Internal policy Q&A

- Knowledge-based customer support

⚠️ Limitations:

- Quality is data-dependent

- Still not autonomous – no reasoning or action-taking

🔹 Stage 3: AI Agent – Autonomous Action Starts Here

🔧 What it is:

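AI Agents go beyond answering questions; they take actions. They plan, use tools, keep memory, and make decisions in pursuit of a defined goal. A common pattern is the ReAct agent (Reason + Act), which loops through reasoning about the next step, calling a tool, and observing the result until the goal is met.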

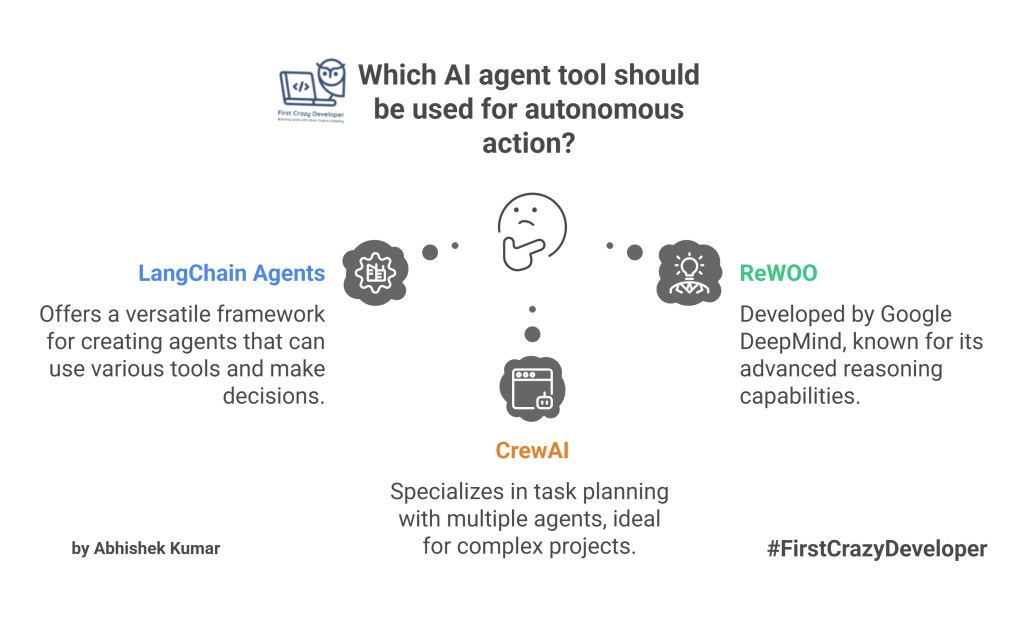

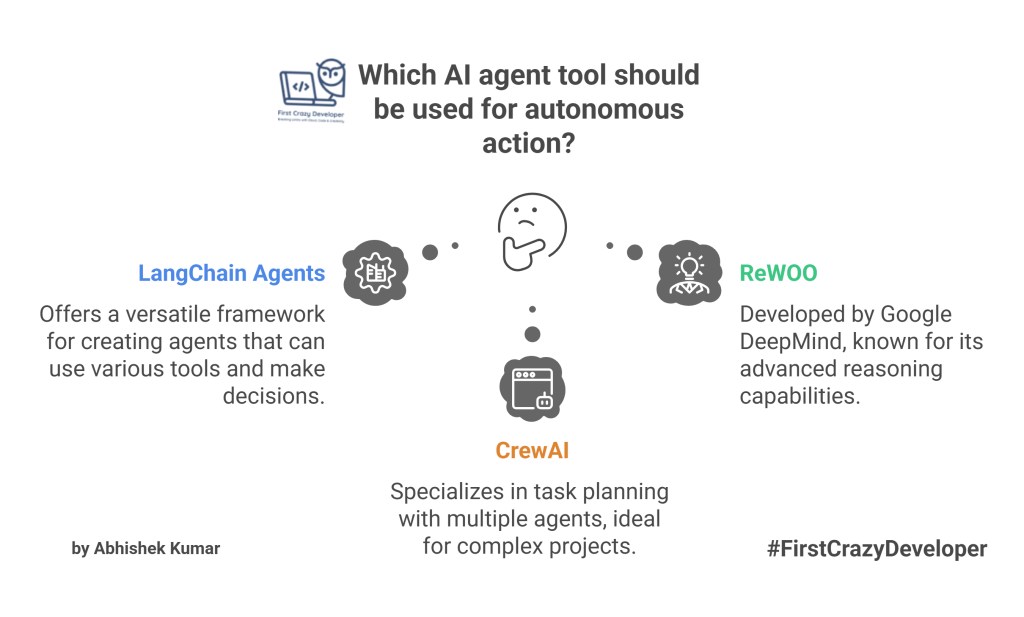
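Under the hood, a ReAct agent is a loop: the model reasons about the next step, picks a tool, observes the result, and repeats until it can answer. The sketch below assumes a `scripted_policy` function standing in for the LLM and two hypothetical toy tools (`lookup_price`, `multiply`); the widget price is a made-up placeholder. The `max_steps` budget is one way to keep an agent's logic scoped.

```python
from typing import Callable, Dict, List, Tuple, Union

# A step is either a tool call (tool_name, tool_input) or a final answer string.
Step = Union[Tuple[str, str], str]


def react_loop(
    policy: Callable[[str, List[str]], Step],
    tools: Dict[str, Callable[[str], str]],
    goal: str,
    max_steps: int = 5,
) -> str:
    transcript: List[str] = []
    for _ in range(max_steps):
        step = policy(goal, transcript)  # Reason: decide the next step from goal + history
        if isinstance(step, str):        # the policy produced a final answer
            return step
        tool_name, tool_input = step
        tool = tools.get(tool_name)
        observation = tool(tool_input) if tool else f"Unknown tool: {tool_name}"  # Act
        transcript.append(f"Action: {tool_name}({tool_input}) -> Observation: {observation}")  # Observe
    return "Stopped: step budget exhausted."


# --- Hypothetical toy tools and a scripted stand-in for the LLM ---
def lookup_price(item: str) -> str:
    return str({"widget": 4.5}.get(item, "unknown"))  # placeholder price


def multiply(expr: str) -> str:
    a, b = (float(x) for x in expr.split("*"))
    return str(a * b)


def scripted_policy(goal: str, transcript: List[str]) -> Step:
    # A real agent would prompt the LLM with the goal, tool descriptions, and transcript.
    if not transcript:
        return ("lookup_price", "widget")
    if len(transcript) == 1:
        price = transcript[0].rsplit(": ", 1)[1]
        return ("multiply", f"{price}*10")
    return "10 widgets cost " + transcript[-1].rsplit(": ", 1)[1]


tools = {"lookup_price": lookup_price, "multiply": multiply}
print(react_loop(scripted_policy, tools, "How much do 10 widgets cost?"))
```

Frameworks such as LangChain generate the policy's decisions from the LLM's text output, but the reason, act, observe cycle is the same.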

🛠 Real-world Dev Tools:

- LangChain Agents

- ReWOO (Reasoning WithOut Observation, a plan-first agent pattern)

- CrewAI (task planning with multiple agents)

🛠 Dev Example (LangChain Agent):

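```python
# Assumes LangChain 0.2/0.3-era packages, langchain-experimental, and SERPAPI_API_KEY/OPENAI_API_KEY set.
from langchain.agents import AgentType, initialize_agent
from langchain_community.agent_toolkits.load_tools import load_tools
from langchain_experimental.tools import PythonREPLTool
from langchain_openai import OpenAI

llm = OpenAI(temperature=0)
tools = load_tools(["serpapi"])   # web search
tools.append(PythonREPLTool())    # runs arbitrary Python: use only in a sandbox
agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)
agent.invoke({"input": "Find the latest news about NVIDIA and plot its stock trend for this week."})
```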

✅ Best Use Cases:

- Research automation

- Workflow orchestration

- Marketing analysis bots

⚠️ Limitations:

- Needs well-defined tools and tasks

- Logic must be scoped properly

- A single agent doesn’t scale well to large, multi-step missions

🔹 Stage 4: Agentic AI – The Scalable Ecosystem

🔧 What it is:

Agentic AI involves a multi-agent system where different agents collaborate, specialize, share memory, and divide tasks to achieve complex goals. It’s akin to a team of AI coworkers.

🧠 Memory | 🔄 Feedback | 🧭 Reasoning | 📡 Communication

Each agent has:

- A role

- A goal

- A communication interface (e.g., message-passing)
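The role, goal, and message-passing structure can be sketched in plain Python, independent of any framework. This is a hypothetical, minimal design (not the CrewAI or AutoGen API): an `Orchestrator` routes `Message` objects between agents and keeps a shared-memory dict that every agent can read, and each agent's `handle` function stands in for an LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Message:
    sender: str
    recipient: str
    content: str


@dataclass
class Agent:
    role: str
    goal: str
    # (incoming text, shared memory) -> reply; a real agent would call an LLM here.
    handle: Callable[[str, Dict[str, str]], str]
    inbox: List[Message] = field(default_factory=list)


class Orchestrator:
    """Routes messages between agents and keeps a shared memory (a simple blackboard)."""

    def __init__(self, agents: List[Agent]):
        self.agents = {a.role: a for a in agents}
        self.memory: Dict[str, str] = {}
        self.log: List[Message] = []

    def send(self, msg: Message) -> None:
        self.log.append(msg)
        self.agents[msg.recipient].inbox.append(msg)

    def run_pipeline(self, order: List[str], task: str) -> str:
        # Pass the task along a fixed chain of roles: each agent reads its inbox,
        # replies, and its reply is stored in shared memory and forwarded.
        payload, sender = task, "user"
        for role in order:
            self.send(Message(sender, role, payload))
            agent = self.agents[role]
            incoming = agent.inbox.pop(0)
            payload = agent.handle(incoming.content, self.memory)
            self.memory[role] = payload
            sender = role
        return payload


def research(text: str, memory: Dict[str, str]) -> str:
    return f"facts about {text}"


def analyze(text: str, memory: Dict[str, str]) -> str:
    return f"report based on [{memory['Researcher']}]"


team = Orchestrator([
    Agent(role="Researcher", goal="Collect facts", handle=research),
    Agent(role="Analyst", goal="Summarize findings", handle=analyze),
])
print(team.run_pipeline(["Researcher", "Analyst"], "Microsoft"))
```

A fixed pipeline is the simplest routing choice; real frameworks also decide which agent speaks next, bound feedback loops, and control what goes into shared memory.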

🛠 Real-world Example:

Use CrewAI or AutoGen to build teams of agents:

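```python
from crewai import Agent, Crew, Process, Task

# Uses OPENAI_API_KEY by default. backstory (Agent) and expected_output (Task) are required.
researcher = Agent(role="Researcher", goal="Collect facts about Microsoft",
                   backstory="A meticulous tech-industry researcher.")
analyst = Agent(role="Analyst", goal="Summarize findings and prepare a report",
                backstory="A business analyst who turns raw notes into clear reports.")

# Without a search tool, the researcher relies on the LLM's own knowledge.
task1 = Task(description="Gather the latest news and GitHub activity on Microsoft",
             expected_output="A bullet list of recent news items and notable GitHub activity",
             agent=researcher)
task2 = Task(description="Summarize the findings and draft a plan for an infographic",
             expected_output="A short summary plus an outline of the infographic's sections",
             agent=analyst)

# Sequential process: task2 receives task1's output as context.
crew = Crew(agents=[researcher, analyst], tasks=[task1, task2], process=Process.sequential)
print(crew.kickoff())
```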

✅ Best Use Cases:

- Project managers with task-specific agents

- Smart R&D assistants

- Strategic game or simulation agents

⚠️ Limitations:

- Complex to design

- Memory control, orchestration, and feedback loops are tricky to get right

- Needs multi-agent governance

🔁 Visual Recap: LLM → RAG → AI Agent → Agentic AI

| Stage | Capability | Ideal Use Case |
|---|---|---|
| LLM | Basic text generation | Chatbots, content writing |
| RAG | Text + real-time data | Legal Q&A, document-based support |
| AI Agent | Plans + tools + memory + reasoning | Workflow automation, research bot |
| Agentic AI | Multi-agent collaboration & memory | Project managers, complex simulations |

💡 Which One Should You Use?

- Just starting? → Start with LLMs + RAG

- Building serious tools? → Add AI Agents

- Want autonomous teams? → Explore Agentic AI

🧠 Final Take – Abhishek Take 🎯

“AI Agents are the moment where GenAI becomes useful—not just smart.

Agentic AI is where it becomes scalable—not just impressive.”

If you’re a developer or architect planning real-world AI applications, stop focusing only on prompts. Start designing goal-driven agents that can plan, act, and evolve.

#AI #GenerativeAI #AIAgents #AgenticAI #RAG #LLM #MachineLearning #AbhishekKumar #FirstCrazyDeveloper #AIArchitecture #AIProductDevelopment
